The Evolution of Generative Grammar: From Traditional to Transformational Approaches

Generative grammar fundamentally changed how linguists think about language structure. The approach, initiated by Noam Chomsky in the 1950s, holds that humans have an innate capacity for language, and its core aim is to explain how a finite set of rules can generate an unbounded number of sentences. This sets it apart from traditional grammar, which mainly describes usage without modeling the structures underneath it. This post traces the evolution of generative grammar and its continuing relevance to contemporary linguistics and to neighboring fields such as cognitive science and language acquisition research.
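The claim that finite rules yield unbounded output can be made concrete with a toy phrase-structure grammar. The sketch below is illustrative only: the rule set, the category labels (`Vi`, `Vs`), and the `budget` cap are assumptions made for this example, not an analysis taken from the generative literature. Because one rule for `VP` re-introduces `S`, each increase in the embedding budget licenses new, longer sentences.

```python
# Toy phrase-structure rules (hypothetical, chosen only for illustration).
RULES = {
    "S": [["NP", "VP"]],
    "NP": [["the", "N"]],
    "VP": [["Vi"], ["Vs", "that", "S"]],  # second option re-enters S: recursion
    "N": [["child"], ["linguist"]],
    "Vi": [["slept"]],
    "Vs": [["said"]],
}


def expand(symbol, budget):
    """Yield each word list derivable from `symbol`.

    `budget` caps how many times S may be expanded along one path, so the
    enumeration terminates even though the grammar itself is unbounded.
    """
    if symbol not in RULES:  # terminal word
        yield [symbol]
        return
    if symbol == "S":
        if budget == 0:
            return
        budget -= 1
    for right_hand_side in RULES[symbol]:
        yield from expand_sequence(right_hand_side, budget)


def expand_sequence(symbols, budget):
    """Yield each word list derivable from a sequence of symbols."""
    if not symbols:
        yield []
        return
    for head in expand(symbols[0], budget):
        for tail in expand_sequence(symbols[1:], budget):
            yield head + tail


def sentences(budget):
    """Return all sentences with at most `budget` nested clauses."""
    return [" ".join(words) for words in expand("S", budget)]


if __name__ == "__main__":
    for depth in (1, 2, 3):
        print(depth, len(sentences(depth)))
    print(sentences(2)[1])
```

The six rules never change, yet the number of sentences keeps growing as the cap is raised; removing the cap altogether would leave no longest sentence.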

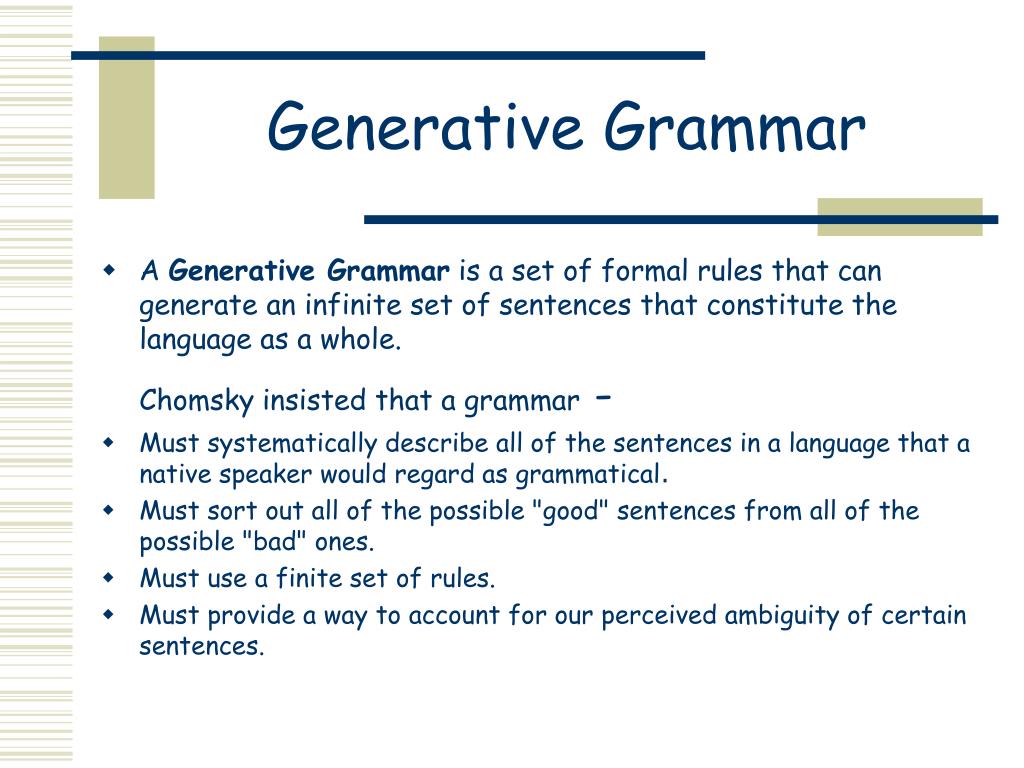

Introduction to Generative Grammar

Generative grammar shifted the focus of linguistics from describing language to explaining the principles that underlie it. Its central claim is that humans are born with an innate ability to acquire language. Chomsky’s theories emerged in the mid-20th century and changed how linguists think about language learning and structure. Where traditional grammar catalogs usage or prescribes rules, generative grammar looks for the universal principles that govern all languages. The sections that follow introduce its main tenets and then trace its historical and theoretical development.

Historical Context of Transformational Grammar

Transformational grammar grew out of earlier linguistic theory, in particular American structural linguistics. Chomsky’s work in the 1950s, notably Syntactic Structures (1957), broke with earlier models that cataloged observed forms without asking what innate structures make them possible. Chomsky was influenced by his teacher Zellig Harris, who had already used transformations to relate sentence types to one another, and he built that idea into a generative theory of grammar. Seeing how structural linguistics laid this groundwork makes it easier to appreciate how sharp a break transformational grammar was.

Basic Mechanisms of Transformational Grammar

Transformational grammar rests on the distinction between deep structure and surface structure. Deep structure is an abstract syntactic representation that encodes a sentence’s core grammatical relations and, in the classic version of the theory, serves as the input to semantic interpretation. Surface structure is the form that is actually spoken or written. Transformations are rules that map deep structures onto surface structures. In subject-auxiliary inversion, for example, the auxiliary of the declarative "The student will read the book" moves in front of the subject to give the question "Will the student read the book?" Rules of this kind refer to the structure of the sentence rather than to the linear order of its words, which is why they reveal so much about the relationship between meaning and form.
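Subject-auxiliary inversion can be sketched as a function over a structured clause. This is a deliberately simplified illustration, not a formal grammar: the `Clause` class, its three fields, and the function names are assumptions made for this example. The point it shows is that the rule targets the auxiliary of the main clause, not whichever auxiliary happens to come first in the word string.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Clause:
    """A much-simplified stand-in for a deep structure (hypothetical)."""
    subject: tuple[str, ...]
    auxiliary: str
    predicate: tuple[str, ...]


def _spell_out(words, punctuation):
    """Join words, capitalize the first letter, and add final punctuation."""
    text = " ".join(words)
    return text[0].upper() + text[1:] + punctuation


def declarative(clause):
    """Surface form with no inversion: subject, auxiliary, predicate."""
    return _spell_out([*clause.subject, clause.auxiliary, *clause.predicate], ".")


def subject_aux_inversion(clause):
    """Yes/no question: the main-clause auxiliary moves before the subject."""
    return _spell_out([clause.auxiliary, *clause.subject, *clause.predicate], "?")


if __name__ == "__main__":
    simple = Clause(("the", "student"), "will", ("read", "the", "book"))
    print(declarative(simple))
    print(subject_aux_inversion(simple))

    # The subject contains its own auxiliary ("is"), which must stay in place.
    complex_subject = Clause(
        ("the", "student", "who", "is", "tired"), "will", ("read", "the", "book")
    )
    print(subject_aux_inversion(complex_subject))
```

A rule stated over word order alone ("front the first auxiliary") would wrongly move "is" in the second example; stating it over the clause structure avoids that.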

Core Concepts: Competence vs. Performance

One of Chomsky’s significant contributions to linguistics is the distinction between linguistic competence and performance. Competence is a speaker’s tacit, largely unconscious knowledge of their language, including the rules and structures that govern it. Performance is the actual use of language in real situations, where memory limitations, distraction, and social context can all produce hesitations, slips, and fragments. The distinction matters for both research and pedagogy: an error in speech does not necessarily reflect a gap in knowledge, so linguists studying acquisition have to separate what a learner knows from what the learner happens to produce on a given occasion.

The Role of Universal Grammar

Universal grammar is a central tenet of generative grammar. It proposes that all humans share an innate linguistic framework, so that despite the vast diversity of languages, their structures show fundamental similarities rooted in shared cognitive capacities. The principles and parameters model makes this concrete: principles are constraints that hold in every language, while parameters are a limited set of options that each language fixes in its own way and that a child sets on the basis of the language heard. Word order within phrases is a common example, with verbs preceding their objects in English and following them in Japanese. The same framework has also been used to think about language teaching, since it suggests which properties of a new language must be learned and which are already available to the learner.
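The interplay of a shared principle and a language-specific parameter can be sketched in a few lines. The example below is a simplification under stated assumptions: it models only a single head-direction setting, the `HEAD_INITIAL` table and `build_phrase` function are hypothetical names invented for this illustration, and the Japanese forms are given in rough romanization.

```python
# Parameter settings (a simplified, hypothetical table: one switch per language).
HEAD_INITIAL = {"English": True, "Japanese": False}


def build_phrase(language, head, complement):
    """Principle: every phrase combines a head with its complement.

    Parameter: the language decides whether the head comes first or last.
    """
    if HEAD_INITIAL[language]:
        return f"{head} {complement}"
    return f"{complement} {head}"


if __name__ == "__main__":
    # Verb phrase: verb (head) plus object (complement).
    print(build_phrase("English", "read", "the book"))
    print(build_phrase("Japanese", "yomu", "hon-o"))

    # Adpositional phrase: the same switch places "in" before its noun
    # in English and "ni" after its noun in Japanese.
    print(build_phrase("English", "in", "Tokyo"))
    print(build_phrase("Japanese", "ni", "Tokyo"))
```

One setting accounts for two surface facts at once, which is the kind of economy the principles and parameters model is meant to capture.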

Advances through Transformational Generative Grammar

Transformational generative grammar improved on traditional grammar in three main ways: it made grammaticality a central object of study, it introduced transformations, and it formalized grammar with mathematical precision. Grammaticality is a property assigned by the grammar itself, and it is distinct both from meaningfulness and from how acceptable a sentence feels to a listener. Chomsky’s well-known example "Colorless green ideas sleep furiously" is nonsensical yet grammatically well formed, which shows that structural well-formedness can be analyzed independently of meaning. Together these innovations gave linguists tools for analyzing complex phenomena in syntax and semantics.

The Minimalist Program: A New Approach

Chomsky’s minimalist program emerged in the 1990s as a response to the growing complexity of earlier theories, aiming to streamline the principles and parameters framework. It asks how much of grammar can be derived from considerations of economy and efficiency, keeping only the machinery that is strictly necessary. By prioritizing simplicity, the minimalist program challenges linguists to reconsider the assumptions behind previous models. The shift affects theoretical linguistics first of all, but it also bears on cognitive science research and on language teaching.

Criticisms and Challenges to Generative Grammar

Despite its significant influence, generative grammar faces criticism from both inside and outside the field. Internal critiques often focus on the lack of consensus about which parameters exist and on the difficulty of defining universal grammar precisely. External critiques come from functionalist and cognitive linguists, who argue for a usage-based account in which structure emerges from how language is actually used. Generative grammar also faces practical challenges in language instruction, where its abstract formalism is hard to translate into classroom methods. These criticisms are part of an ongoing debate about the place of generative grammar in contemporary linguistics.

Conclusion: The Future of Generative Grammar

Generative grammar continues to shape linguistic theory and teaching practice. This post has followed it from its break with traditional and structural grammar, through deep and surface structure, the competence and performance distinction, and universal grammar, to the minimalist program and the criticisms it faces today. As linguistics evolves, ongoing debates will influence how new findings are integrated and how the theory adapts. Knowing the historical context and the theoretical developments behind current work is the best preparation for following where generative grammar goes next.